How Edge Computing Is Changing The Way We Learn


The world of computing is constantly evolving, and one of the newest and most exciting developments is edge computing. This powerful new technology has the potential to revolutionize the way we use and interact with digital devices, and it’s already starting to make waves in a number of different industries. In this post, we’ll take a closer look at edge computing, exploring what it is, how it works, and why it has the potential to be so transformative.

What is Edge Computing?

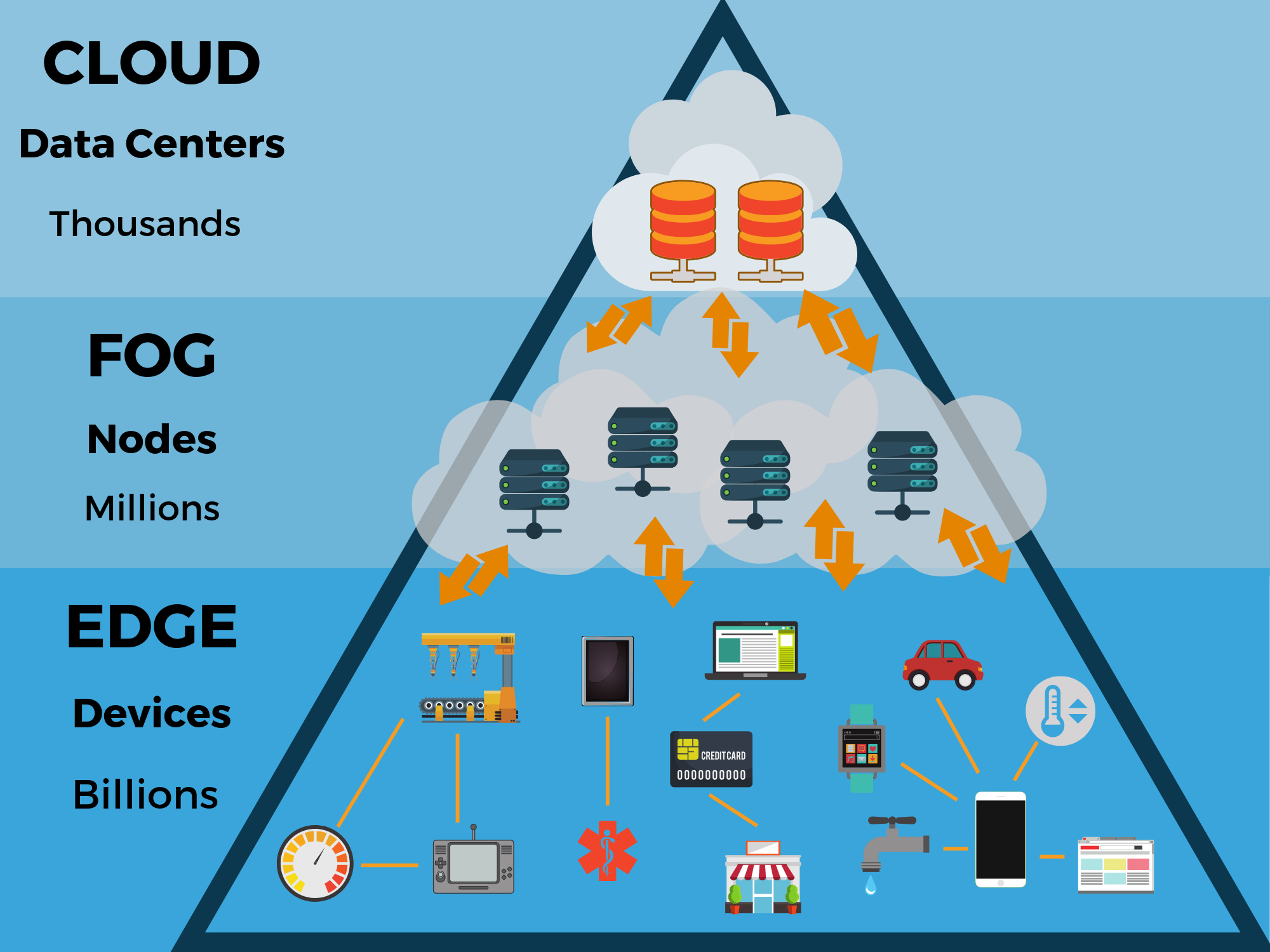

Simply put, edge computing means processing data at the edge of the network, near the devices that generate it, rather than in a centralized data center. Traditionally, large amounts of data are sent from devices to remote servers, where they are processed and stored. Edge computing instead moves that processing closer to the source of the data, so information can be analyzed, sorted, and stored locally rather than first being transmitted over long distances.

Why is Edge Computing Important?

There are a number of different reasons why edge computing is becoming increasingly important. First and foremost, it enables more efficient and effective processing of data. Rather than transmitting large amounts of information to be processed elsewhere, edge computing lets that processing happen in real time, at the source of the data. This can be particularly important in situations where processing needs to happen very quickly, or where sending large volumes of raw data could put a strain on the network.
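As a rough illustration of the network-strain point, consider a hypothetical vibration sensor that samples 1,000 times per second at 8 bytes per sample. Both numbers are assumptions chosen for easy arithmetic, not measurements. Sending every raw sample upstream costs far more bandwidth than sending a once-per-second summary of three 8-byte values (for example a count, a mean, and a maximum) computed on the device:

```latex
\begin{aligned}
B_{\text{raw}}  &= 1000\ \text{samples/s} \times 8\ \text{B/sample} = 8000\ \text{B/s} \\
B_{\text{edge}} &= 1\ \text{summary/s} \times 3\ \text{values} \times 8\ \text{B/value} = 24\ \text{B/s} \\
\frac{B_{\text{raw}}}{B_{\text{edge}}} &= \frac{8000}{24} \approx 333
\end{aligned}
```

The exact ratio depends entirely on the workload; the point is only that summarizing locally can shrink upstream traffic by a large factor when the raw data stream is dense.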

But there are other benefits to edge computing as well. When sensitive data is processed locally instead of being sent across the network, it can be easier to keep it secure. Additionally, edge computing can enable greater flexibility and adaptability in certain types of systems. Because processing takes place at the edge of the network, it may be easier to modify or upgrade systems as needed, without making major changes to the centralized processing infrastructure.
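A minimal Python sketch of the security point: a hypothetical edge node holds readings tagged with the person they came from, and forwards only an aggregate that contains no identities. The `Reading` class, its fields, and the `summarize_locally` function are illustrative assumptions, not part of any particular edge platform.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List, Union


@dataclass
class Reading:
    owner: str       # hypothetical sensitive identifier: never leaves the edge node
    heart_rate: int  # hypothetical measurement, in beats per minute


def summarize_locally(readings: List[Reading]) -> Dict[str, Union[int, float]]:
    """Reduce raw readings to an aggregate with no owner identities in it."""
    rates = [r.heart_rate for r in readings]
    return {
        "count": len(rates),
        "mean_bpm": round(mean(rates), 1),
        "max_bpm": max(rates),
    }


readings = [Reading("alice", 72), Reading("bob", 88), Reading("carol", 95)]
summary = summarize_locally(readings)
print(summary)  # only this dictionary would be sent upstream
```

Only `summary` would cross the network; the per-person `readings` stay on the device. Aggregation on its own is not a complete privacy guarantee (very small groups can still reveal individuals), so this illustrates data minimization rather than a full security design.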

How Does Edge Computing Work?

So how exactly does edge computing work in practice? There are a number of different ways in which edge computing can be implemented, depending on the specific needs of the system in question. Generally speaking, though, edge computing involves the use of local computing resources, such as servers or edge devices, to process data that is generated nearby.

One common implementation of edge computing involves the use of edge devices, which are small, specialized computers that are placed near the source of the data. These devices are designed to perform specific tasks, such as monitoring sensors or processing data from cameras. Because they’re small and specialized, edge devices can be relatively inexpensive to deploy and maintain.
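To make the sensor-monitoring case concrete, here is a small Python sketch of what an edge device's processing loop might look like. It assumes a hypothetical `EdgeSensorMonitor` class that smooths raw readings with a moving average over the last few samples and produces a message for the upstream server only when the smoothed value crosses a threshold; every other reading is handled locally and never transmitted. The threshold, window size, and message format are arbitrary choices for illustration.

```python
from collections import deque
from typing import Optional


class EdgeSensorMonitor:
    """Hypothetical edge-device logic: smooth readings locally and report
    upstream only when the smoothed value crosses a threshold."""

    def __init__(self, threshold: float, window: int = 5) -> None:
        self.threshold = threshold
        self.recent = deque(maxlen=window)  # keeps only the last `window` readings
        self.alerting = False               # True while the average is above threshold

    def process(self, reading: float) -> Optional[str]:
        """Handle one raw reading; return a message to send upstream, or None."""
        self.recent.append(reading)
        avg = sum(self.recent) / len(self.recent)
        above = avg > self.threshold
        if above != self.alerting:          # state changed, so it is worth reporting
            self.alerting = above
            return f"{'ALERT' if above else 'CLEAR'} avg={avg:.1f}"
        return None                         # nothing new: the reading stays local


monitor = EdgeSensorMonitor(threshold=50.0, window=3)
readings = [40, 45, 48, 60, 70, 72, 40, 30, 20]
messages = [m for m in (monitor.process(r) for r in readings) if m is not None]
print(messages)  # ['ALERT avg=51.0', 'CLEAR avg=47.3']
```

In this run, nine raw readings produce just two upstream messages. Reporting state changes instead of every sample is one simple way to keep traffic low; a real device would also need to cope with faulty sensors and lost connectivity.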

Abstract

Edge computing is a powerful new technology that has the potential to revolutionize the way we process and interact with data. By enabling processing to take place at the edge of the network, edge computing can help to improve the speed and efficiency of data processing while also offering security and flexibility benefits. Although there are a number of different ways in which edge computing can be implemented, one of the most common approaches involves the use of edge devices, which are small, specialized computers that can be placed near the source of the data.

Introduction

The world of computing is constantly evolving, and one of the latest and most exciting developments is the rise of edge computing. This powerful new technology has emerged as a way to process data locally, at the edge of the network, rather than transmitting data to a centralized data center for processing. While this might sound like a small change, the potential implications of edge computing are truly enormous.

Emerging technologies are transforming the way that we live and work, and edge computing is no exception. In this post, we’ll dive into the world of edge computing, exploring what it is, how it works, and why it is so important. Along the way, we’ll take a look at some of the latest developments in edge computing, as well as some examples of how this technology is already being implemented by businesses and organizations around the world.

Content

At its core, edge computing means processing data at the edge of the network rather than in a centralized data center. There are a number of reasons why this can be a better approach. For example, edge computing can improve the speed and efficiency with which data is processed, because data can be handled locally, at its source, instead of first being transmitted over long distances. This can be particularly important when processing needs to happen in real time, or when transmitting large amounts of raw data would put a strain on the network.

But there are other reasons why edge computing is becoming an increasingly important technology as well. For example, edge computing can help to improve the security of data by enabling sensitive information to be processed locally, rather than being transmitted over the network. Additionally, edge computing can provide greater flexibility and adaptability in certain types of systems.

So, how exactly does edge computing work in practice? There are a number of different ways in which edge computing can be implemented, depending on the specific needs of the system in question. One of the most common approaches to edge computing involves the use of edge devices. These are small, specialized computers that are placed near the source of the data, and which are designed to perform specific tasks. For example, an edge device might be used to monitor a sensor or to process data from a camera. Edge devices can be relatively inexpensive to deploy and maintain, which makes them an attractive option for many businesses and organizations that are looking to implement edge computing.

Of course, deploying edge devices is just one approach to edge computing. There are a number of other ways that this technology can be implemented as well. For example, some organizations might use cloud-based edge computing, in which data is processed in a distributed network of servers rather than in a centralized data center. Others might rely on edge gateways, which serve as the interface between the edge devices and the rest of the network. Regardless of the specific approach used, the overall goal of edge computing is always the same: to provide faster, more efficient processing of data, with greater flexibility and adaptability than traditional centralized data centers.
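Edge gateways can be pictured in a similar way. The sketch below assumes a hypothetical `EdgeGateway` that receives messages from nearby devices and forwards them upstream in batches, so the wider network sees a few larger requests instead of many small ones. The upstream connection is stood in for by a plain callback (`send_upstream`), since the real transport depends on the deployment.

```python
import json
from typing import Callable


class EdgeGateway:
    """Hypothetical gateway: collects messages from local edge devices and
    forwards them upstream in batches instead of one request per message."""

    def __init__(self, send_upstream: Callable[[str], None], batch_size: int = 3) -> None:
        self.send_upstream = send_upstream  # stand-in for the real network link
        self.batch_size = batch_size
        self.pending = []                   # messages waiting to be forwarded

    def receive(self, device_id: str, payload: dict) -> None:
        """Accept one message from a device; forward once a batch is full."""
        self.pending.append({"device": device_id, **payload})
        if len(self.pending) >= self.batch_size:
            self.flush()

    def flush(self) -> None:
        """Send any pending messages upstream as a single JSON batch."""
        if self.pending:
            self.send_upstream(json.dumps(self.pending))
            self.pending = []


sent = []
gateway = EdgeGateway(send_upstream=sent.append, batch_size=3)
for i in range(7):
    gateway.receive(f"sensor-{i % 2}", {"seq": i})
gateway.flush()  # push out the final, partly filled batch
print(len(sent))
```

With seven messages and a batch size of three, the gateway makes three upstream calls: two full batches and a final partial one sent by the explicit `flush()`. A production gateway would typically also flush on a timer so that a partly filled batch is not held indefinitely.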

So, why is edge computing becoming such an important technology today? There are a number of reasons. For one thing, the rise of the Internet of Things (IoT) has led to a massive increase in the amount of data that is being generated and processed by businesses and organizations around the world. As more and more devices become connected to the internet, the need for faster, more efficient processing of all that data is becoming increasingly pressing. Additionally, the rise of artificial intelligence (AI) and machine learning (ML) has led to a need for more powerful processing capabilities that can be deployed closer to the source of the data. Edge computing is well suited to meeting these needs, because processing data close to its source can lower latency, reduce the amount of raw data sent over the network, and keep sensitive information local.
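The latency argument can be checked with a back-of-the-envelope calculation. Signals in optical fiber travel at roughly two thirds of the speed of light in a vacuum, about 200,000 km/s. Assuming, purely for illustration, a centralized data center 1,500 km away and an edge node 10 km away, the round-trip propagation delay is approximately twice the distance divided by the signal speed:

```latex
\begin{aligned}
t_{\text{cloud}} &\approx \frac{2 \times 1500\ \text{km}}{2 \times 10^{5}\ \text{km/s}} = 0.015\ \text{s} = 15\ \text{ms} \\
t_{\text{edge}}  &\approx \frac{2 \times 10\ \text{km}}{2 \times 10^{5}\ \text{km/s}} = 0.0001\ \text{s} = 0.1\ \text{ms}
\end{aligned}
```

These figures cover propagation only; routing, queuing, and processing time add to both, and real network paths are usually longer than the straight-line distance. Even so, the gap shows why time-critical workloads benefit from being handled nearby.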

Of course, there are still some challenges associated with edge computing. One of the biggest is ensuring that data is processed securely and reliably. Because edge devices are distributed throughout a network, it can be more difficult to ensure that data is processed in a way that is always secure and protected. Additionally, there are issues associated with data storage and management. Because data is being processed and stored in a distributed fashion, it can be more challenging to manage and organize than it would be in a centralized data center. Nevertheless, as these challenges are addressed, the potential of edge computing is becoming increasingly clear.

Conclusion

Edge computing is a powerful and rapidly evolving technology that has the potential to transform the world of computing as we know it. By enabling faster, more efficient processing of data, edge computing can help businesses and organizations to be more responsive, more flexible, and more effective in meeting the needs of their customers and clients. As more and more devices become connected to the internet and the amount of data being generated and processed continues to increase, the need for edge computing is only going to grow.

Of course, there are still some challenges associated with edge computing that need to be addressed. As this technology continues to evolve, we can expect to see new solutions emerge to address these challenges and to further improve the capabilities and potential of edge computing. As businesses and organizations around the world continue to explore the possibilities of this exciting new technology, it seems likely that the world of computing is on the cusp of another major transformation.

Image source: www.rtinsights.com

Image source: www.theoryofcomputation.co

Image source: www.iasparliament.com