What Is Edge Computing?


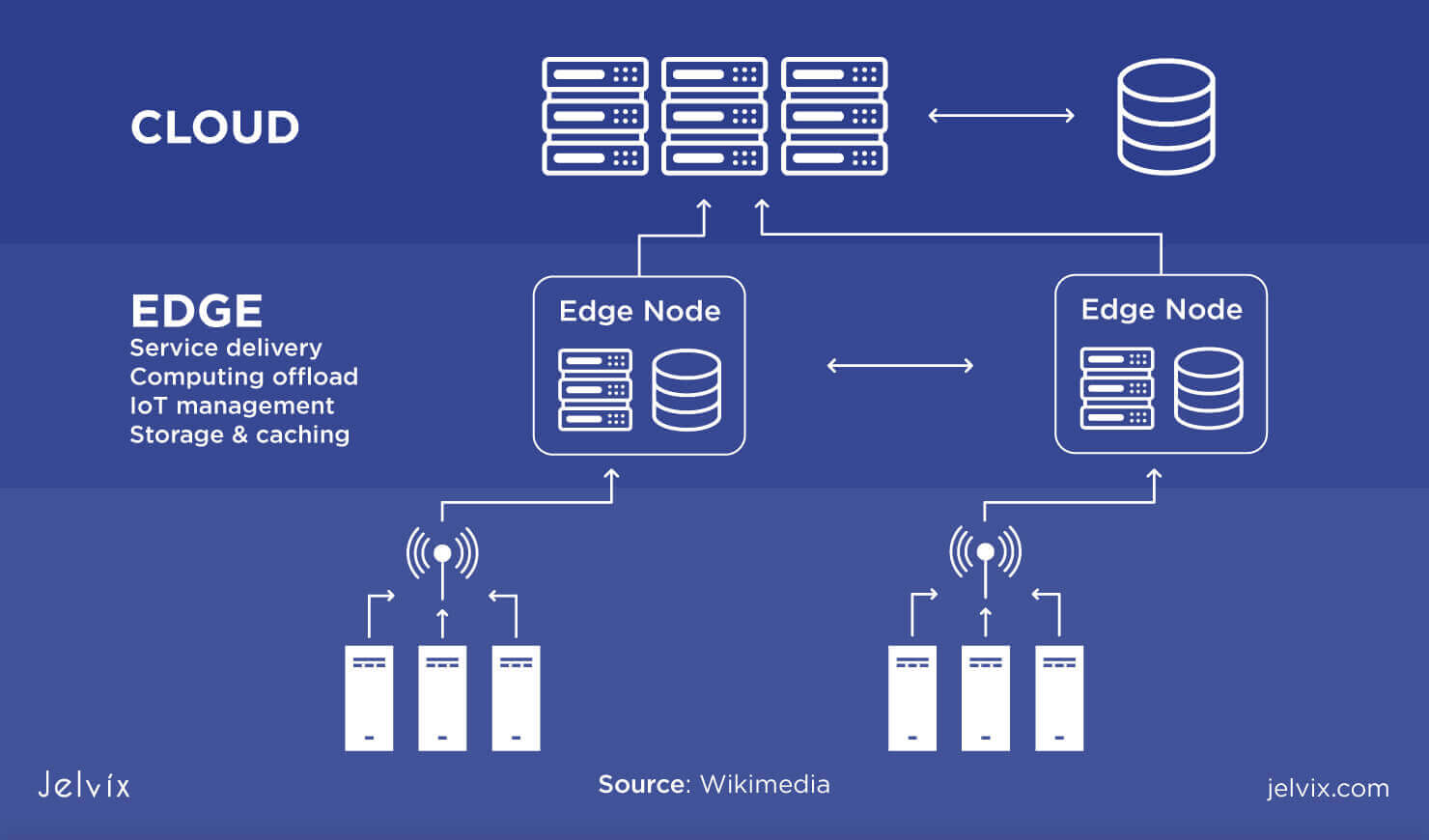

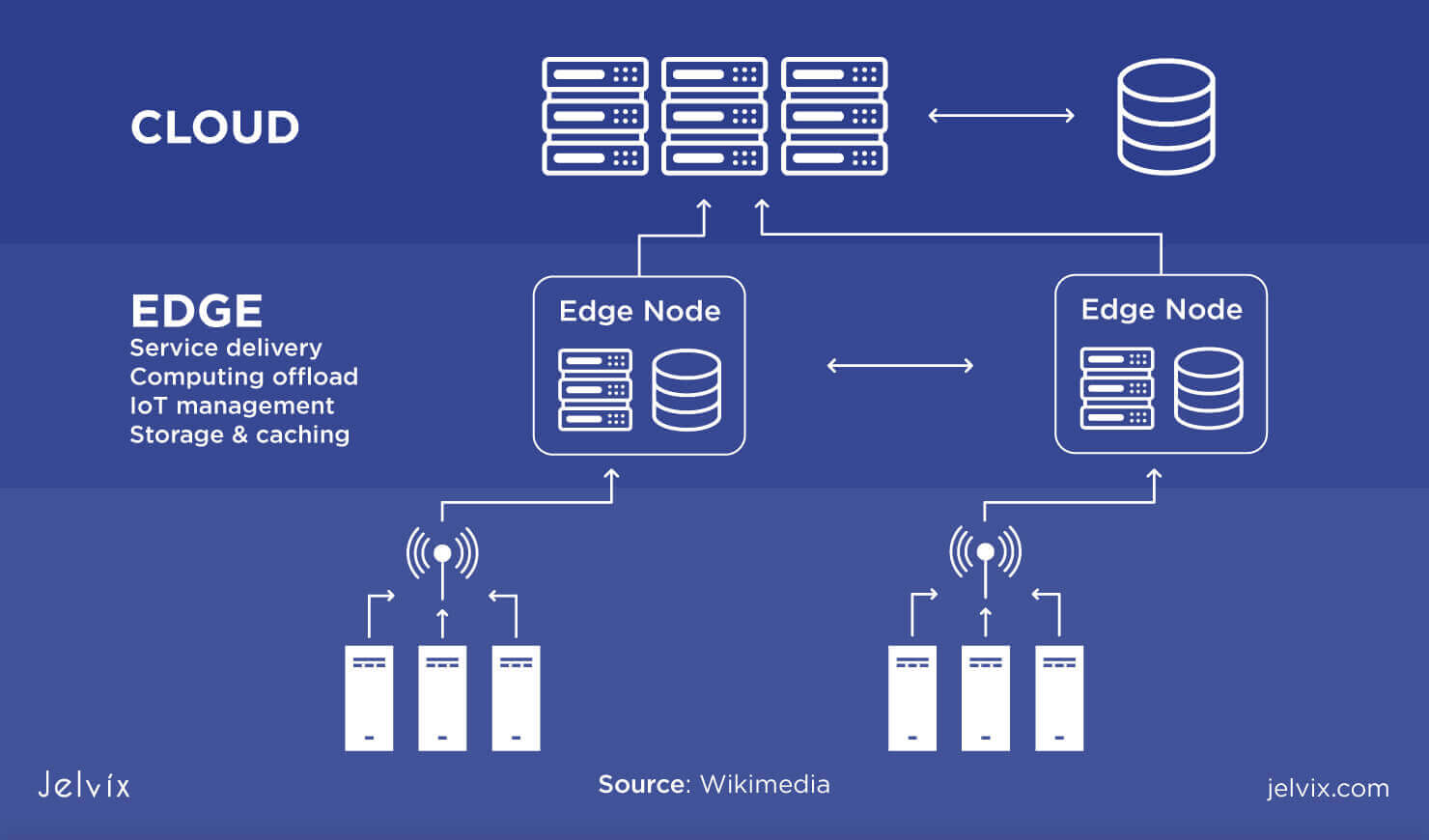

Edge computing is a relatively new concept in technology. It delivers computing resources closer to the end user by using devices located near where data is produced and consumed. As a result, data processing and storage happen close to the user rather than in a centralized data center or cloud.


Edge computing is a distributed computing model that brings computation and data storage closer to where they are needed. In this model, data is processed and analyzed at the edge of the network, where it is generated, rather than being transferred to the cloud or a centralized data center for processing. This reduces latency and bandwidth requirements, enabling faster response times and improved efficiency.
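To make "processing at the edge" concrete, the following minimal sketch shows an edge device reducing a window of raw sensor readings to a compact summary before anything leaves the device. The `Summary` type, the `summarize_at_edge` function, and the sample readings are hypothetical and serve only as illustration; a real deployment would choose its own windowing and summary fields.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Summary:
    """Compact record an edge device could forward instead of raw readings (hypothetical)."""
    sensor_id: str
    count: int
    minimum: float
    maximum: float
    average: float


def summarize_at_edge(sensor_id: str, readings: list[float]) -> Summary:
    """Reduce a window of raw readings to a summary, computed locally on the device."""
    if not readings:
        raise ValueError("need at least one reading")
    return Summary(sensor_id, len(readings), min(readings), max(readings), mean(readings))


# Usage: one window of hypothetical temperature readings
window = [21.0, 21.5, 22.0, 21.5]
print(summarize_at_edge("temp-01", window))
```

Only the summary would travel to the cloud. The raw window can be discarded or kept locally, depending on retention needs.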

Advantages of Edge Computing

Edge computing offers several advantages. First, it allows for faster response times, because data is processed close to its source rather than in a centralized location. Tasks that require immediate action, such as factory floor automation or traffic management, can therefore be completed with less delay.
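Some back-of-the-envelope arithmetic shows why distance matters. The sketch below computes only the round-trip propagation delay. It assumes signals travel through optical fiber at roughly 200,000 km/s (about two-thirds of the speed of light) and ignores queuing, routing, and processing time, which add to both figures in practice. The two distances are hypothetical.

```python
# Approximate signal speed in optical fiber: about two-thirds of the speed of light.
FIBER_SPEED_KM_PER_S = 200_000


def round_trip_propagation_ms(distance_km: float) -> float:
    """Round-trip propagation delay in milliseconds.

    Ignores queuing, routing and processing time, so real latency is higher.
    """
    return 2 * distance_km / FIBER_SPEED_KM_PER_S * 1000


# Hypothetical distances to a nearby edge node and a distant data center
for label, km in [("nearby edge node", 10), ("distant data center", 2000)]:
    print(f"{label}: {round_trip_propagation_ms(km):.1f} ms")
```

Under these assumptions, the edge node's propagation floor is about 0.1 ms versus about 20 ms for the distant data center. The gap matters for control loops that must react within a few milliseconds.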

Second, edge computing can reduce the amount of data sent to the cloud or a centralized data center, because devices can filter or summarize data before transmitting it. This can lower bandwidth costs and reduce transmission latency.
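The size of the bandwidth saving depends entirely on the workload. The following sketch estimates daily upload volume for a hypothetical deployment of 100 sensors, each sending a 16-byte reading ten times per second. It compares that volume with an edge alternative in which each sensor sends one 64-byte summary per minute. All figures are assumptions chosen for illustration, not measurements.

```python
SECONDS_PER_DAY = 86_400


def daily_bytes(messages_per_second: float, bytes_per_message: int) -> float:
    """Total bytes uploaded per day at a steady message rate."""
    return messages_per_second * bytes_per_message * SECONDS_PER_DAY


# Hypothetical workload: 100 sensors x 10 readings/s, 16 bytes per reading
raw = daily_bytes(100 * 10, 16)
# Edge alternative (hypothetical): each sensor sends one 64-byte summary per minute
summarized = daily_bytes(100 / 60, 64)

print(f"raw: {raw / 1e6:.1f} MB/day, summarized: {summarized / 1e6:.1f} MB/day")
print(f"reduction: {raw / summarized:.0f}x")
```

With these assumed numbers, upload volume falls by a factor of about 150. Real savings depend on how much detail the central system still needs.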

Third, edge computing can improve the security and privacy of data. When data is processed locally, sensitive information does not need to be sent over public networks, which reduces the risk of it being exposed in transit.
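One possible way to keep identifiers on the device is to process records locally, upload only the fields the central system needs, and replace direct identifiers with a keyed hash. The sketch below uses Python's standard `hmac` and `hashlib` modules. The secret, the field names, and the record layout are hypothetical. Keyed hashing is pseudonymization, not anonymization: anyone holding the secret can still test candidate identifiers against the hashes.

```python
import hashlib
import hmac

# Hypothetical secret that stays on the edge device and is never uploaded.
EDGE_SECRET = b"replace-with-a-device-local-secret"


def pseudonymize(identifier: str) -> str:
    """Replace a direct identifier with a keyed hash (HMAC-SHA256, hex-encoded)."""
    return hmac.new(EDGE_SECRET, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def prepare_for_upload(record: dict) -> dict:
    """Keep only the fields the cloud needs; the name and address never leave the device."""
    return {
        "subject": pseudonymize(record["name"]),
        "reading": record["reading"],
    }


# Usage with a hypothetical local record
local_record = {"name": "Jane Doe", "address": "1 Example St", "reading": 72}
print(prepare_for_upload(local_record))
```

The same pseudonym is produced for the same person on the same device, so the central system can still group readings by subject without seeing who the subject is.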

Challenges of Edge Computing

While edge computing has several advantages, it also has its share of challenges. One of the biggest challenges is the limited processing power and storage capacity of edge devices.

As more data is generated at the edge, devices need to process and analyze it in real time. This often requires powerful, specialized hardware, which can be expensive and difficult to maintain.

Another challenge is the lack of standardization. Because the technology is relatively new, there is still no single, widely adopted standard infrastructure or architecture for edge computing. This can make it difficult for developers to build applications that run across multiple platforms.

Use Cases for Edge Computing

Edge computing has numerous use cases across various industries. Here are a few examples:

Manufacturing

Edge computing can improve the efficiency of manufacturing operations. By installing IoT devices on factory floors, manufacturers can collect real-time data about machine performance, production volumes, and quality control. Analyzing this data locally enables faster responses and better decision-making.
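As one illustration of local analysis on the factory floor, the sketch below flags unusual machine readings on the edge device using a rolling z-score. A reading is flagged when it lies more than `threshold` standard deviations from the mean of the last `window` readings. The class name, window size, threshold, and vibration values are all hypothetical. A production system would likely use a model tuned to the specific machine.

```python
from collections import deque
from statistics import mean, pstdev


class RollingAnomalyDetector:
    """Flags a reading more than `threshold` standard deviations away from
    the mean of the last `window` readings (a rolling z-score)."""

    def __init__(self, window: int = 20, threshold: float = 3.0):
        # Bounded buffer: memory use stays fixed regardless of how long it runs.
        self.history = deque(maxlen=window)
        self.threshold = threshold

    def check(self, value: float) -> bool:
        """Return True if `value` is anomalous, then add it to the history."""
        is_anomaly = False
        if len(self.history) >= 2:
            mu = mean(self.history)
            sigma = pstdev(self.history)
            if sigma > 0 and abs(value - mu) / sigma > self.threshold:
                is_anomaly = True
        self.history.append(value)
        return is_anomaly


# Usage with hypothetical vibration readings; only the last one is a spike.
detector = RollingAnomalyDetector(window=5, threshold=3.0)
vibration = [1.0, 1.1, 0.95, 1.0, 1.05, 4.0]
flags = [detector.check(v) for v in vibration]
print(flags)
```

The fixed-size `deque` suits resource-limited devices. For simplicity, this sketch also adds flagged readings to the history. A real deployment might exclude them so that one spike does not distort later checks.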

Healthcare

Edge computing has significant potential in healthcare. By installing IoT devices in hospitals and clinics, doctors can monitor patient health in real time. This can support earlier detection of health problems and improve patient outcomes. Edge computing can also reduce the workload of healthcare workers by automating routine tasks such as monitoring vital signs and sending medication reminders.
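Routine vital-sign monitoring can be sketched as a rule check that runs on a bedside device, so an alert does not have to wait for a round trip to the cloud. The limits below are purely illustrative placeholders, not clinical guidance. Real alarm limits are set by clinicians, often per patient. The `Vitals` type, `LIMITS` table, and `local_alerts` function are likewise hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Vitals:
    heart_rate_bpm: int
    spo2_percent: int


# Illustrative limits only (hypothetical, NOT clinical guidance).
LIMITS = {
    "heart_rate_bpm": (40, 130),
    "spo2_percent": (92, 100),
}


def local_alerts(v: Vitals) -> list[str]:
    """Check each reading against its (low, high) range on the local device."""
    alerts = []
    for field, (low, high) in LIMITS.items():
        value = getattr(v, field)
        if not low <= value <= high:
            alerts.append(f"{field}={value} outside [{low}, {high}]")
    return alerts


print(local_alerts(Vitals(heart_rate_bpm=72, spo2_percent=97)))  # → []
print(local_alerts(Vitals(heart_rate_bpm=145, spo2_percent=89)))
```

Only the alerts, or periodic summaries, would need to be forwarded to a central system.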

Retail

Edge computing can help retailers improve the customer experience. By analyzing customer data in real time, retailers can personalize the shopping experience for each customer. They can also use the data to optimize store layouts and product selections, which may lead to higher sales.

Conclusion

Edge computing has the potential to transform the way we live and work. By bringing computing resources closer to the user, it can deliver faster response times, improved efficiency, and better data protection. While challenges remain, its advantages make it an exciting technology to watch in the coming years.

Image source: jelvix.com

Image source: contenteratechspace.com

Image source: www.cbinsights.com